Positioning, Messaging, and Branding for B2B tech companies. Keep it simple. Keep it real.

Two weeks ago, a CFO told Mark Stouse, “My go-to-market operation is bankrupt.”

Not struggling. Not underperforming.

Bankrupt.

This is where a lot of B2B tech companies quietly are right now. CAC keeps climbing. Deal size is down. Cycles are longer. And the default response is to replace tools, rebuild the dashboard, or layer AI on top of it all.

During our recent Causal CMO chat, Mark laid out a simple but uncomfortable truth: the tools aren’t the problem. The GTM model underneath them is.

And that’s a huge reason why B2B GTM remains stuck.

All software, including your entire martech stack, is codified logic. It embeds assumptions about how buying works, how leads behave, and what predicts revenue.

“All software is codified information. It represents the way we learn and process information. And that means it embodied the logic that went into B2B marketing and go-to-market, starting in about 2000-ish.”

That’s the deterministic gumball machine. You put a quarter in. A gumball comes out.

Apply that to B2B revenue and you get: fill the top of the funnel, closed deals come out the bottom.

The problem is that buying is human behavior. Not thermodynamics. Treating it as deterministic was always wrong. It “appeared to work” long enough that none of us had to face it. Now we do.

The reality is marketing has always been probabilistic. It has never been a linear deterministic process.

Here’s what happens when you build technology on top of flawed assumptions like “gumball” logic:

“Technology is a point of leverage for human activity. If you’re wrong in your logic, automating it for scale just means you create more crap.”

That’s why a lot of teams feel worse after modernizing their stack. More automation. More sequences. More scoring. More dashboards. More “confidence”. Less truth.

The tools worked exactly as designed. The design was the problem.

That design wasn't invented by marketers. It came from VC boards and investors who wanted predictability and a narrative they could control.

“The idea of a deterministic go-to-market machine originated with VCs.”

A lot of what GTM teams are being blamed for now was baked in from the top. The problem is conditions changed. For example, “no decision” now kills more deals than competitors do.

Old logic is exposed.

This is the part most GTM conversations skip.

B2B marketing hasn’t grappled with what it actually means to have been wrong. Not just tactically. Foundationally. And the proof is how AI is being deployed right now: layered on top of the same frameworks that already stopped working before GenAI became a thing.

“No one likes to hear this. I don’t like to hear it. But if we have the wrong tool, it’s because it has the wrong logic sequence. It’s embodying our logic sequence, the one that we told it to have.”

Here’s why this is important to understand if GTM teams want to fix the model:

“Accumulated knowledge and experience goes straight to the heart of our self-concept. As soon as you tell me that 20% of my knowledge is obsolete, I take that rather personally. When I realized that the price of learning is being wrong about what I thought I knew before, I became much more okay with it. Even if your response is, I’m just not going to learn anymore, you’re still wrong. You’re just wrong and frozen.”

Let that sink in for a moment.

Wrong and frozen is not a neutral position. It’s a career-ending one for GTM leaders who can’t explain to their CFO why results keep sliding.

And if you keep hitting a wall with your leadership team because they don’t want to hear the truth, it may be time to update your CV.

A causal model is what Mark calls “a digital twin of known reality”. It surfaces what’s actually driving outcomes, net of everything you don’t control. And it produces something most dashboards never give you: a stack rank of what’s working.

“You see things change places in that stack rank. If you’re in the bottom third, you need to kill it or figure out why.”

It also tells you time to value. Every tactic has a different lag to results. If you don’t know when something is supposed to pay off, you’ll either kill it too early or keep it too long.

This is where GTM leaders need to step up and call a spade a spade:

“Time lag allows you to set expectations accurately with your executive team. Let’s say, looking in advance, this is going to create a lot of value, but it’s going to take 16 months. If you come to me and complain at month 12, or month 10, I’ll point back and say, You agreed. Here’s your signature.”

That’s not a forecast. That’s a defensible commitment. There’s a difference.
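To make the stack rank and time-lag ideas concrete, here is a minimal sketch. It is not Mark's model or any vendor's API: the tactic names, effect estimates, and lag values are invented for illustration, and in practice they would come out of a causal model rather than being typed in by hand.

```python
from dataclasses import dataclass


@dataclass
class Tactic:
    name: str
    effect: float      # estimated contribution to outcomes (hypothetical units)
    lag_months: int    # expected time before results show up (hypothetical)


def stack_rank(tactics):
    """Sort tactics from strongest to weakest estimated effect."""
    return sorted(tactics, key=lambda t: t.effect, reverse=True)


def bottom_third(ranked):
    """Return the lowest-ranked third: kill it or figure out why."""
    cutoff = len(ranked) - len(ranked) // 3
    return ranked[cutoff:]


def too_early_to_judge(tactic, months_running):
    """A tactic should not be judged before its expected lag has passed."""
    return months_running < tactic.lag_months


# Hypothetical inputs, not real data.
tactics = [
    Tactic("analyst relations", 0.9, 16),
    Tactic("events", 1.4, 6),
    Tactic("paid search", 0.3, 2),
    Tactic("customer webinars", 1.1, 4),
    Tactic("outbound sequences", 0.2, 3),
    Tactic("brand content", 0.7, 12),
]

ranked = stack_rank(tactics)
review = [t.name for t in bottom_third(ranked)]
```

With these made-up numbers, `review` holds the two weakest tactics, and `too_early_to_judge(tactics[0], 12)` is true: a 16-month bet should not be second-guessed at month 12.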

A causal model doesn’t report on last quarter. It tells you where you are now, what's changed, and what route gives you the best chance of hitting your destination. Judea Pearl calls this causal engineering: not just what happened, but why, and what to do differently.

Mark equates this causal mindset to a GPS:

“It will start to say: you were on a really good route. But things have changed. This is not a good route anymore. In fact, you may need to switch cars.”

If the map is wrong, every route looks optimized. You’re still lost.

This shift in mindset is what GTM teams need to consider to get their models unstuck.

If your GTM is stalling, ask yourself these questions before you approve any new tool or campaign:

If the answers are unclear, you don’t have an execution problem. You have a model problem.

Start there. Write down your current GTM assumptions. All of them. Then ask which ones you’ve actually tested and which ones you inherited.

That’s the first step. It costs nothing but honesty.

More won’t fix it. Faster won’t fix it.

Fixing the logic fixes it.

Missed the session? Watch it here.

Mark’s full research is on his Substack.

If you like this content, here are some more ways I can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor

Achim is a fractional CMO who helps B2B GTM teams with brand-building and AI adoption. Gerard is a seasoned project manager and executive coach helping teams deliver software that actually works.

If your AI plan does not start with a real business problem, it’s a hobby.

Write one page that says:

Then turn it into an OKR, so it survives meetings, churn, and Q2 priorities.

Someone says, “We need an AI strategy.”

Two weeks later you have:

That’s a common pattern.

This article is a follow-up to One-Page AI Strategy Template: Replace Roadmaps With Clarity, where we argued that your AI strategy should fit on one page.

This article shows you how to write it, then turn it into an OKR, then run it for 12 months without it turning into another forgotten “playbook” in your drawer.

Open a doc and answer the following four questions.

A strategy has to earn its keep.

Prompt:

Example:

If your diagnosis starts with “We want to use AI,” you’re already off course. AI is not a replacement plan. It’s an outsourcing plan for the first draft.

This section stops chaos.

You need two things:

AI helps you solve the problem. It does not solve it for you. It does not own the decision.

If you want a practical risk anchor, OWASP’s LLM Top 10 maps well to what breaks in real deployments (prompt injection, insecure integrations, unsafe output handling).

And if leadership wants a governance reference, NIST AI RMF and the GenAI profile give you a credible backbone without turning this into a policy manual.

Pick one metric that proves the policy worked.

Target:

Example:

Now add no-cheating metrics:

If those get worse, your “wins” are fake.

List the next 2–3 actions for the next 90 days.

Example:

If your first step is “create a committee,” you’re writing a plan to feel safe, not to get results.

This is where it becomes operational.

Google’s OKR guidance keeps it simple:

Objective

Your Primary focus becomes the Objective. Human. Clear. Directional. For example: “Use AI to reduce support load so our agents can solve customers’ hardest problems.”

Key Results

Your Target becomes the KR. Numeric. Time-bound. Auditable. For example: “Resolve 50% of incoming support tickets via approved AI by end of Q3, with 90% CSAT.”

Now each team can set supporting KRs that fit their world, but everyone works against the same top-level definition of success.
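One way to keep the example KR auditable is to check it the same way every time. The sketch below is an assumption about how that check might look, not part of Google's OKR guidance. The function name, the status labels, and the idea of treating CSAT as the guardrail are ours, and the thresholds simply mirror the 50% and 90% figures in the example KR.

```python
def key_result_status(ai_resolved, total_tickets, csat,
                      target_share=0.50, csat_floor=0.90):
    """Check the example KR: share of tickets resolved via AI, guarded by CSAT.

    ai_resolved and total_tickets are ticket counts; csat is a 0-1 fraction.
    """
    if total_tickets <= 0:
        raise ValueError("total_tickets must be positive")
    share = ai_resolved / total_tickets
    if csat < csat_floor:
        # Hitting the target while the guardrail slips does not count as a win.
        return "fake win" if share >= target_share else "off track"
    return "on track" if share >= target_share else "behind"
```

For example, `key_result_status(520, 1000, 0.92)` returns `"on track"`, while the same ticket volume with a CSAT of 0.85 returns `"fake win"`.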

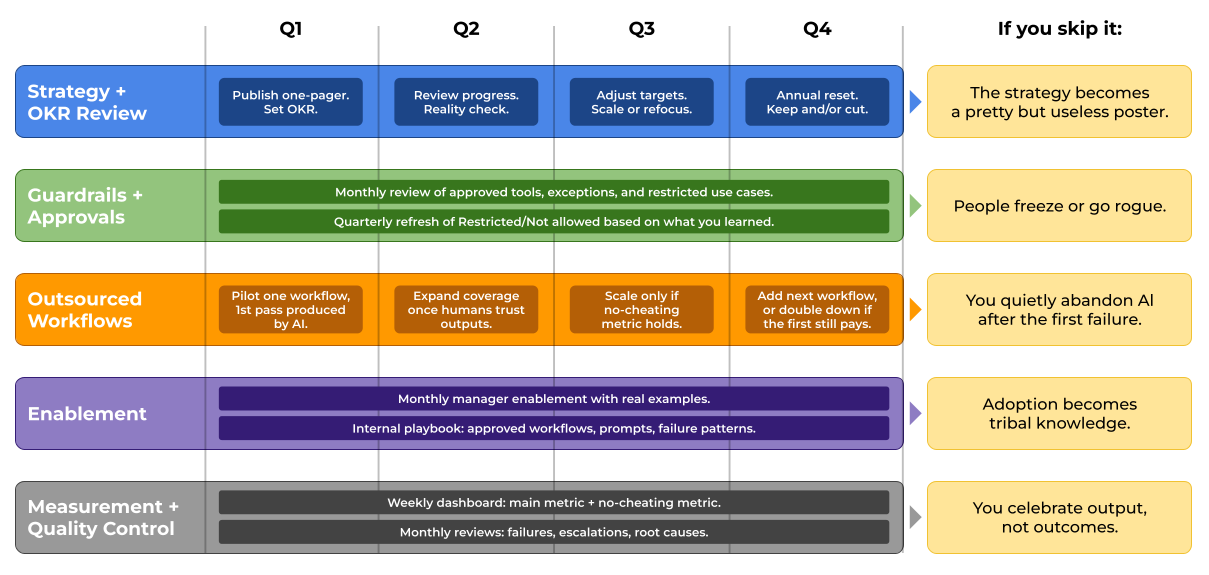

Here’s a 12-month timeline with five swimlanes. This cadence keeps your AI outsourcing plan alive after kickoff.

AI adoption fails because of a lack of planning and discipline.

People can’t connect it to a problem they actually own.

As we already covered in the previous article, one page fixes that.

An OKR keeps it alive.

A calendar makes it stick.

AI does not replace accountability. It exposes whether you have any.


This article is AC-A and published on LinkedIn. Join the conversation!

Imagine you’re scuba diving.

Cruising along a reef wall, barely noticing the current. Then you try to turn back. You can’t. The current is too strong. You panic and your oxygen starts depleting… fast.

That’s GTM in 2026.

In our last Causal CMO chat, that’s the picture Mark Stouse painted as we talked about what happens when the market current flips.

“Doing more” won’t save you.

As with the panicking scuba diver, it almost always makes the problem worse.

Mark’s scuba analogy explains what most dashboards ignore.

Think of “the current” as the externalities out of our control. The headwinds, tailwinds, and crosswinds that affect all aspects of the business, especially go-to-market.

When the current helps you, everything feels easy. Your plays work. Your oxygen lasts.

When the current turns, the same plays cost more and convert less. Not because your team forgot how to sell. Because you’re swimming against something bigger than you. Like buyer behavior.

The ugly part: the moment you need more oxygen is the moment the CFO wants to save air.

If we don’t model market conditions as part of GTM performance, we end up blaming our team for the current. We fire the wrong people. We keep the wrong plays. And wonder why the numbers keep sliding.

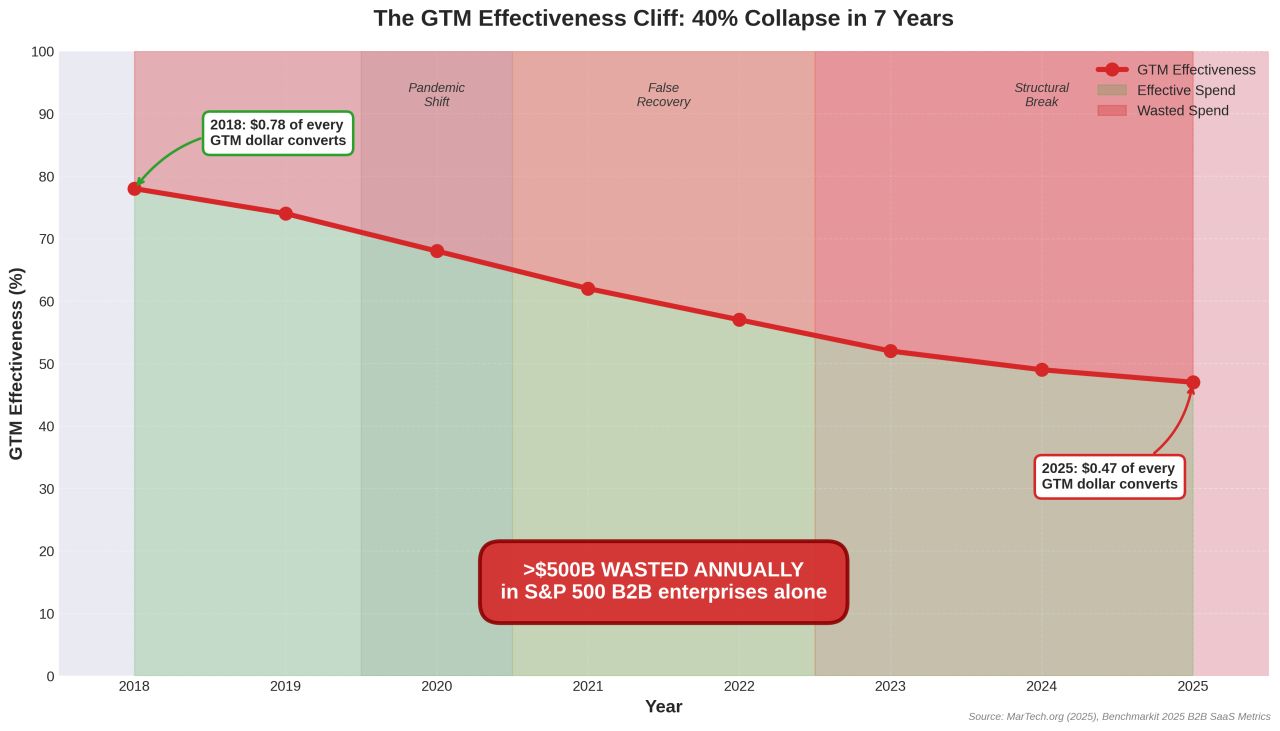

Mark shared some sobering data from his 5-Part Series on Substack: GTM effectiveness declined 40% between 2018 and 2025.

This is not a blip. It’s a systemic break.

Teams kept turning the crank on the same old frameworks while the environment changed underneath them.

Not because they are stupid. Because most GTM systems were built for stable conditions. They never accounted for headwinds, tailwinds, and crosswinds. They just assumed the water would stay calm.

So spend rises. CAC rises. Effectiveness drops.

Mark shared the uncomfortable truth:

“70-80% of why anything happens is stuff we don’t control. We like to think we’re masters of the universe, even in some limited domain. But that’s a total fantasy. A fallacy.”

GTM hits the wall first because it is the first part of the company that collides with the market. It’s “the canary in the coal mine.”

And when the canary chirps, you do not debate the air. You act. Ignore it and you lose the business.

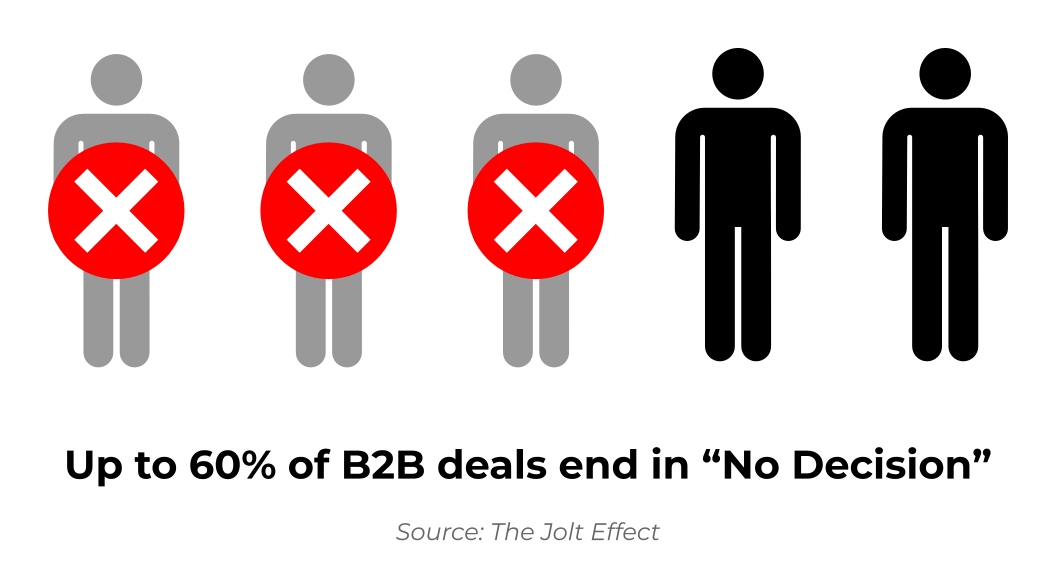

During our chat, I shared a recent real-world closed-lost analysis where over 60% of lost deals ended in “no decision.” That aligns almost perfectly with The JOLT Effect.

Not lost to a competitor. Not lost on price. Lost to inertia.

And Mark’s research shows as many as 4 out of 5 deals are now being lost to no decision.

If your GTM motion is built to beat other vendors, but the real battle is against doing nothing, you’re burning oxygen on the wrong fight.

The data does not lie. It also sucks. You still have to face it before you can change it.

If you are not tracking “no decision” as a first-class outcome, you are guessing about why deals stall. And guessing is expensive when the current turns.
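Treating “no decision” as a first-class outcome can be as simple as tallying closed-lost reasons. The sketch below assumes each lost deal is exported as a dictionary with a `reason` field. Both the field name and the reason labels are hypothetical, so adapt them to whatever your CRM actually records.

```python
from collections import Counter


def loss_breakdown(closed_lost):
    """Return each closed-lost reason as a share of all lost deals."""
    counts = Counter(deal["reason"] for deal in closed_lost)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {reason: n / total for reason, n in counts.items()}


# Hypothetical export, not real data.
lost_deals = [
    {"id": 1, "reason": "no_decision"},
    {"id": 2, "reason": "competitor"},
    {"id": 3, "reason": "no_decision"},
    {"id": 4, "reason": "price"},
    {"id": 5, "reason": "no_decision"},
]

shares = loss_breakdown(lost_deals)
```

If the `no_decision` share dominates, as it does in this made-up sample, the motion is fighting inertia more than other vendors.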

A lot of “process” exists because teams never did the hard work of naming what creates value right now.

Not yesterday. And not tomorrow. Right here. Right now.

Reality changes. Value changes with it.

A lot of reporting is political. Defensive. Built to justify money already spent, not to guide decisions.

That is how process becomes theater.

Lots of activity. Lots of slides. No increase in truth.

What should replace it?

Set expectations up front. Write them down. Then report against them.

Leaders avoid tight expectations because they invite judgment. But in 2026, clear expectations have become the position of highest trust.

Trust is the cartilage in the joint. Without it, everything feels bone-on-bone. That is what it feels like right now between CEOs, CFOs, CMOs, and boards when performance slips and nobody agrees on why.

Eventually you hit the “come to Jesus moment” where reality always wins. You stop trying to force the story. You deal with what is.

It’s painful. It’s also freeing. Nobody debates reality.

I asked Mark how he handles teams that resist forecasting because they “don’t have enough data.”

You will never have all the facts. Stop waiting for a condition that will never exist.

His approach is simple:

Bad assumptions do not just mess up tactics. They flow upstream into strategy. You can execute perfectly and still lose because you picked the wrong hill.

This is not rip-and-replace. Causal AI sits on top of what you already have. The hard part is not technical. It is how people think.

Most teams are trained to chase correlation. That habit creates confidence without proof.

The goal is straightforward: track reality now, track it forward, adjust as conditions change.

You might still arrive late. But you can explain why. You can document variance instead of hand-waving it. That changes decision-making. It also changes trust.

Mark shared a story about his physicist mentor who told him to “use what you know to navigate what you don’t know.”

It reminded me of Neil deGrasse Tyson:

Mark turned that into an operating mindset.

“You have been behaving like a librarian, organizing what you already know. Be an adventurer. Care more about what you don’t know than what you do know.”

In other words, stop defending the map. Start updating it.

If your GTM effectiveness is sliding and the default response is “more leads” or “more output” or “new tools,” pause.

“More” won’t save a bad model.

If your model of reality is based on yesterday (or on what you hope it will be), your KPIs won’t guide you. They’ll distract you. They’ll keep you busy while you drift.

A few gut checks:

Most likely, your stack isn’t what’s broken.

Your model of reality probably is.

Missed the session? Watch it here


This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor


The CEO asks: “What’s our AI strategy?”

Most teams respond the same way. They spin up a working group, collect vendor lists, and crank out a 30-page deck with a three-year roadmap.

It looks impressive.

But it does nothing for Monday morning.

Your AI strategy should change decisions, habits, and outcomes inside the week. Otherwise it’s just theatre.

A strategy is not “we will use AI.”

That’s like saying “we will use spreadsheets.” It’s meaningless.

A real strategy starts with a business obstacle that hurts. Then it makes a clear choice about what to do next.

For example, in a recent boardroom meeting, we heard the following adoption target:

“20% of our workforce will use AI at least 10 times a day by the end of 2026.”

The point isn’t who said it. The point is what it measures: habits.

Not pilots. Not slide decks. Not “exploration.”

AI roadmaps answer the wrong question.

They answer: “What could we do with AI?”

Leaders need the opposite: “As an organization, how can we be more effective at solving our problems?”

As Michael Porter wrote, strategy is choice. It forces trade-offs. It forces you to decide what you won’t do.

Leaders create success frameworks. If you’re successful, the company will be too. These are the basics of OKRs.

AI roadmaps avoid that work. They hide weak choices behind more swimlanes.

Most people scan. They read the top, skim the left edge, and move on.

Nielsen Norman Group documented this behavior with eye-tracking research and the F-shaped scanning pattern.

So if your “strategy” needs a 30-minute read and a 60-minute meeting to decode it, it will die.

A one-page strategy survives because it can be:

Toyota has used the A3 approach for decades: put the problem, analysis, actions, and plan on a single sheet.

Lean Enterprise Institute describes an A3 as getting the problem, analysis, corrective actions, and action plan onto one page.

Same idea here.

One page forces clear thinking and clear communication.

Why? Because real strategy has three parts: diagnosis, guiding policy, measurable target.

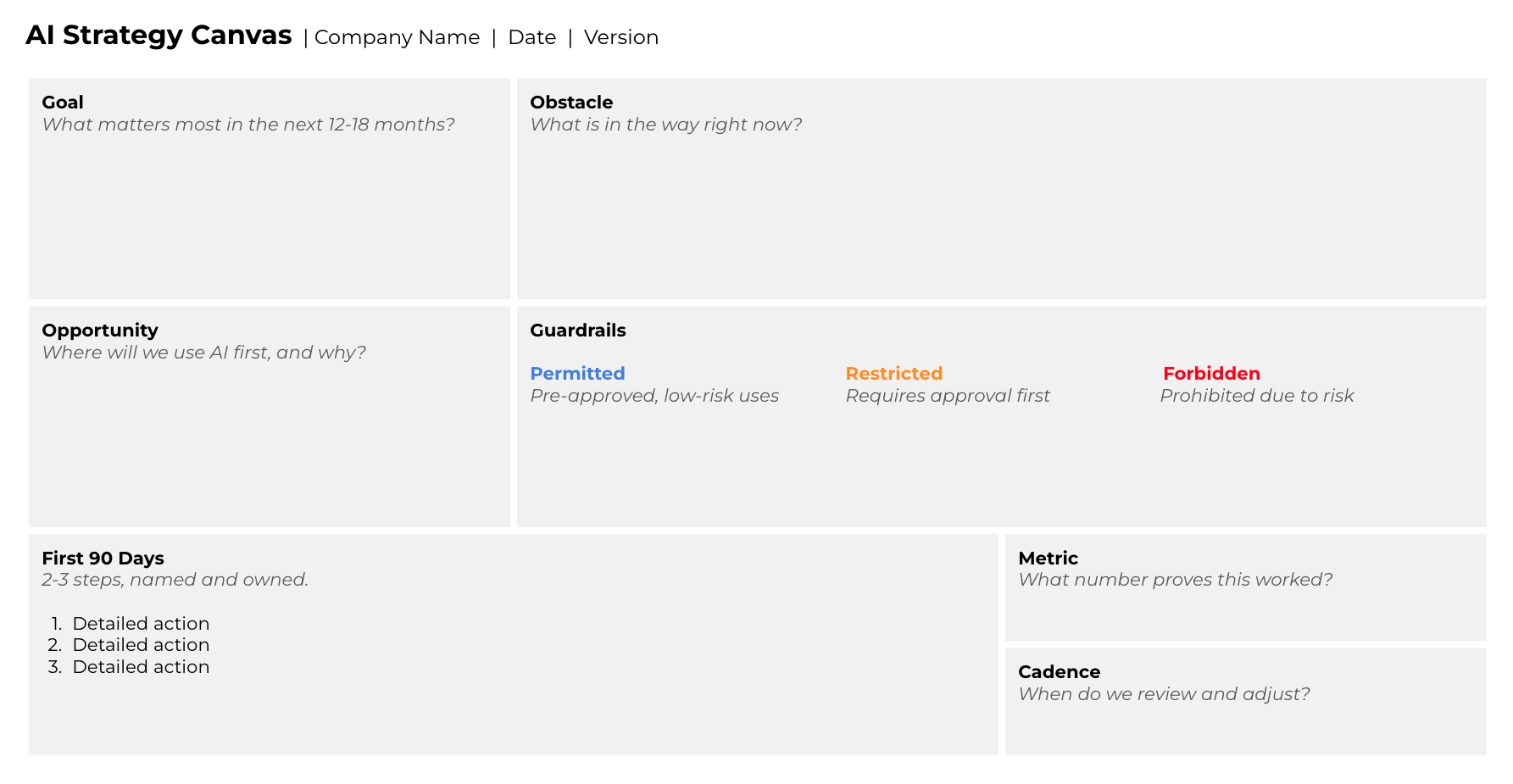

Make a copy of this One-Page AI Strategy Canvas (shown above).

Complete the first page (use the second page example if you’re not sure).

Do one draft only. Try not to tinker or wordsmith.

Then send it to three people who will actually challenge you: one exec, one operator, one skeptic.

Book a 30-minute call.

Your only job in that call is to tighten the choices.

Avoid committees and drawn-out timelines. They defeat the purpose.

Too often, companies get stuck here:

Simple policies beat long policies. The rule of three helps people remember them.

Try this three-bucket guardrail approach:

Keeping things in buckets of three creates clarity.

Clarity turns “I’m not sure if I’m allowed” into “I know what I can do right now.”

Many teams already use AI at least occasionally, even when leadership thinks “we’re still figuring it out.”

Gallup reported daily AI use at work at 10% in Q3 2025 and 12% in Q4 2025, with “frequent use” (a few times a week) at 26% in Q4.

Strategy can’t live in a deck. It has to show up in habits.

Stop writing AI roadmaps nobody will read.

Start with a one-page strategy people can use.

In the next installment, Gerard and I will show you how to turn the One-Page AI Strategy Canvas into an OKR and keep it alive with a simple 12-month review cadence so it doesn’t become “write it once, forget it.”

If this was useful, forward it to one peer who’s drowning in “AI strategy” swimlanes.


This article is AC-A and published on LinkedIn. Join the conversation!

Reality is like gravity. It doesn’t care what you or I think about it.

During last week’s Causal CMO chat, Mark Stouse laid out why so many B2B tech leaders are watching their GTM motion sputter into 2026.

Because here’s what’s true right now: Your CEO wants more MQLs. Your CFO wants proof. And the math says fewer than 1% of leads convert to closed deals in lead-centric processes.

That’s not a rounding error. That’s a broken model.
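A quick back-of-the-envelope check shows what that conversion rate implies. The function below is only arithmetic: it takes the "fewer than 1%" figure as an upper bound and assumes, for simplicity, that conversion stays flat as volume grows.

```python
import math


def leads_needed(target_deals, conversion_pct):
    """Minimum leads required to close target_deals at a given lead-to-close rate."""
    if conversion_pct <= 0:
        raise ValueError("conversion_pct must be positive")
    return math.ceil(target_deals * 100 / conversion_pct)


# At a 1% lead-to-close rate, 10 closed deals take at least 1,000 leads.
leads_for_ten_deals = leads_needed(10, 1)
```

Since the real rate is below 1%, the true number is higher, and each of those leads still costs time and money to generate and work.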

Mark began by making a critical distinction many of us miss.

He separated truth, fact, and reality.

“You have to be willing to see things as they really are. Not as you might want them to be.”

This distinction matters because most GTM conversations get stuck in debates over truth and taste, or cling to outdated facts, while reality (actual win rates, deal velocity, churn drivers) gets ignored.

According to Gartner, 77% of B2B buyers say their last purchase was “very complex or difficult.” When buying gets harder, more leads don’t make buying easier. They just amplify existing problems in your product or sales process.

More also means more riff-raff and distractions. Quantity does not equal quality.

Pew Research found that when Google shows an AI summary, people click traditional search results 8% of the time vs 15% without one. That means your content can exist and still not get visited. Attention is rationed, and trust is mediated by machines.

Mark didn’t mince words:

“It is accountability. It's provable, auditable accountability.”

If you can’t forecast outcomes, budgets tighten. Not because the CFO hates marketing. Because uncertainty costs money.

Mark reframed Opex entirely: it's not an “investment with ROI.” It’s a loan of shareholder money that must be paid back with interest. That means go-to-market spend that doesn’t actively improve revenue, margin, or cash flow is destroying the payback mechanism.

About 16-17% of your expertise becomes irrelevant each year. This isn’t age-related. It’s intellectual intransigence, as Mark put it.

The leaders who win will be “addicted to learning” (his words). Standing still means you’ll have the relevance of someone five years out of college within five years.

In practical terms, Mark put it this way:

“The more stuff in the door argument is an attempt to overwhelm an unpleasant reality under a sea of new, cool stuff.”

If your product is stale, churn is rising, sales cycles drag, or positioning is fuzzy, more volume turns into a megaphone for the problem.

“A great marketing effort on a crappy product or a crappy sales process only makes the company fail faster.”

But the deeper issue is this: if you’re solving for volume, your GTM structure is probably built for 2016, not 2026. Single-threaded MQL systems assume linear buying and individual decision-makers. In reality, buying involves committees, consensus, and risk mitigation. More leads don’t fix that. They only make it worse.

Marketo co-founder Jon Miller echoes this: measurement frameworks are breaking down, and marketing is still treated like a deterministic vending machine.

These aren’t separate problems. They’re symptoms of the same shift.

This isn’t a metric swap. It’s a system (and mindset) shift.

If you need something your CFO will accept, try this:

Forrester’s research backs this: multiple leads contribute to one opportunity, so treating a deal like it came from one MQL misrepresents how revenue actually happens. You’re changing how you think about the entire demand engine.

If you want a deeper dive, check out my 5-Part End of MQLs series.

OK. The following questions expose system problems, not just performance gaps.

Try to answer them as fast as you can (be honest):

If you answered “no” or “not sure” to more than two, you don’t have a lead problem. You have a reality problem.

And that reality problem most likely lives in your operating model, not your tactics.

Day 1: Pull 10 closed-won and 10 closed-lost from the last 6 months. Write the top 3 “why” drivers for each. One page.

Day 3: List where deals actually stall. Not CRM stages. Real moments. Internal disagreement. Budget reset. Security review. Implementation fear. Switching cost. Exec approval.

Day 7: Pick one friction point and fix it for one segment. A clearer proof pack. A tighter implementation plan. A risk-reversal story. A pricing simplification. A buyer enablement asset that helps an internal champion sell you.

Mark's advice on execution was practical:

“There is no requirement that you confront reality in public. Run a skunkworks. Learn. Make changes quietly.”

The point? You want traction without a re-org.

Interested in running a skunkworks project? Mark and I already covered that here.

If you’re clinging to MQLs, it’s most likely because you’re just trying to create certainty based on information you thought was fact and truth.

But once again, reality (like gravity) doesn’t care what we think about it.

Pick one “no” from the diagnostic above. Ask why until you hit a root cause. Then run a seven-day pilot that makes buying easier and risk feel smaller.

You can even use AI to help you. Check out how SaaStr did just that with their Incognito Test.

That’s how you close the reality gap. Not by fighting it, but by accepting it and working with it.

Missed the session? Watch it here


This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor


During a recent (and timely) Lenny’s Podcast episode, Jason Lemkin, cofounder of SaaStr, dropped a few jaws and rolled some eyes (just read the comments) with this:

He used to run go-to-market with about 10 people. Now it’s 1.2 humans and 20 agents. He says the business runs about the same.

That’s a spicy headline.

It’s also the least useful part of the story.

If you’re trying to adopt AI in GTM without breaking trust, below are field notes worth considering.

Jason’s most useful advice is not to “immediately replace your GTM team.”

It’s the Incognito Test:

You will probably find something broken. Maybe support takes too long. Maybe the contact “disappears” into the CRM ether. Maybe your SDRs take three days to respond (or never do).

Pick the thing that makes you the most angry. That’s your first agent use case.

One workflow. One owner. One month of training. Daily QA.

Then decide if you need a second, a third, and so on.

This is a pattern Gerard and I keep seeing too.

The real question isn’t “What’s our AI strategy?” It’s “What’s the smallest thing I can do right now that actually helps?”

Source: Lenny’s Podcast, 1:25:29

Two salespeople quit on-site during SaaStr Annual. No, not after the event… during.

Jason Lemkin had been through this cycle before. Build a team, train them, watch them leave, rinse and repeat. This time, he made a different call:

“We’re done hiring humans in sales. We’re going all-in on agents.”

Fast-forward six months: SaaStr now runs on 1.2 humans and 20 AI agents. Same revenue as the old 10-person team.

Source: Lenny’s Podcast, 0:11:52

Jason said this about SaaStr’s “Chief AI Officer”, Amelia* (a real person):

“She spends about 20%* of her time managing and orchestrating the agents.”

Every day, agents write emails, qualify leads, set meetings. Every day, Amelia checks quality, fixes mistakes, tunes prompts.

This isn’t “set it and forget it.” It’s more like running a production line. A human checks it, fixes it, and tunes the system so it does not drift.

Agents work evenings. Weekends. Christmas.

But if nobody’s watching, the system decays… fast.

And that’s the part vendors don’t put in their demos.

* Amelia is the 0.2 human who spends 20% of her time managing agents. Jason is the 1.0.

Jason’s breakthrough came from a generic support agent. It wasn’t built for sales. It wasn’t trained on sponsorships. But it closed a $70K deal on its own because it “knew” the product and responded instantly at 11 PM on a Saturday.

That’s why this conversation matters.

Not because an agent can write emails. But because one can close revenue if you give it enough context and keep it on rails.

Source: Lenny’s Podcast, 0:07:53

Jason’s experience lines up with what Gerard and I have also seen in the field.

Start with a real workflow, not an “AI strategy”

Speed does not fix the wrong thing

Adoption is a leadership job

Whenever new tech comes along, almost everybody immediately wants the potential upside.

Almost nobody wants the governance.

It reminds me of the dot-com boom. Everyone wanted a website. No one wanted to manage the content ecosystem (that’s still true today).

Agentic AI, like what Jason implemented at SaaStr, is no different.

Customer-facing GTM output carries real risk for your brand reputation. If your AI agents hallucinate, spam, or misread context, you don’t just lose a meeting. You lose trust.

And that’s the simple truth we keep coming back to:

The constraint is not capability. It’s trust. And trust is earned, not bought (or a bot).

In our AI adoption challenges article, we said tool demos hide the messy middle: setup, access, QA, and the operational reality of keeping this stuff reliable.

Jason’s story doesn’t remove that mess. It confirms it. He just decided the mess was worth automating.

Watch the full conversation: Lenny's Podcast with Jason Lemkin


This article is AC-A and published on LinkedIn. Join the conversation!